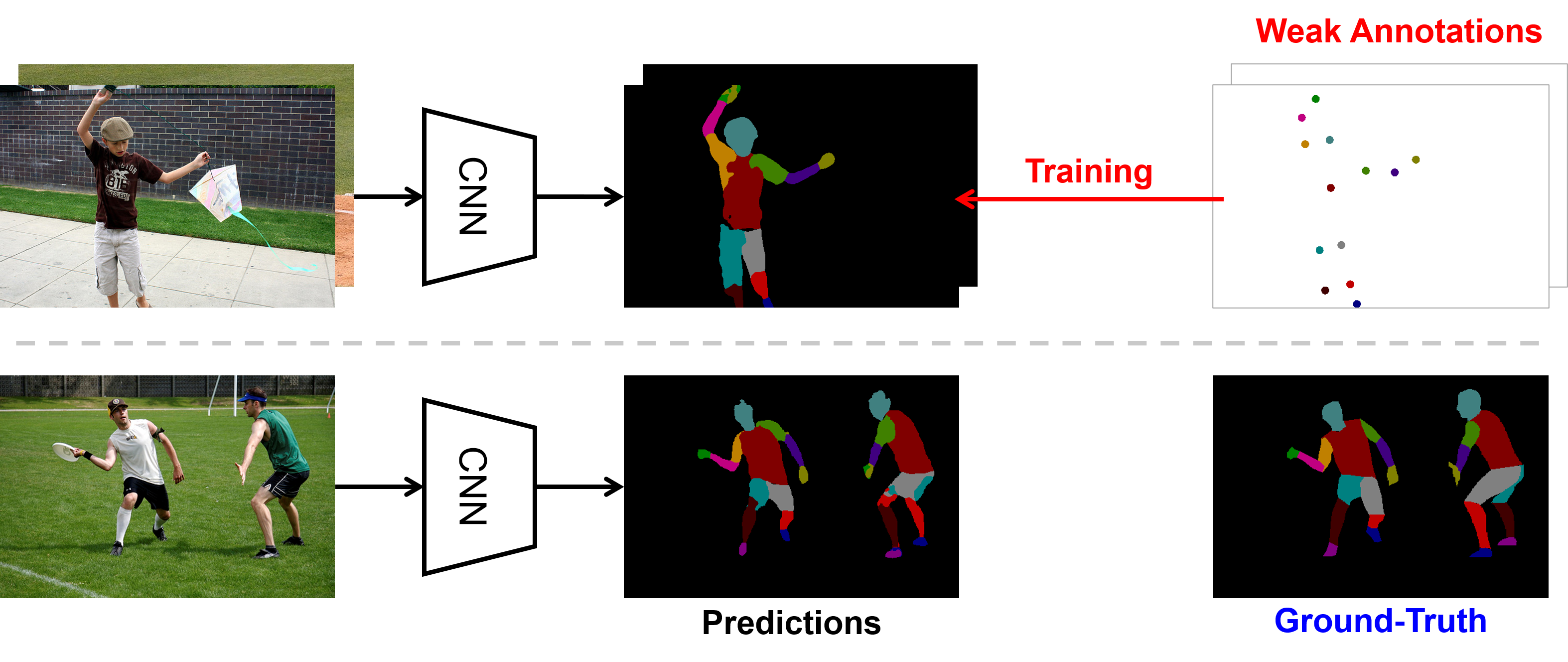

Matteo posted: "We consider the following problem: can a machine learn from a few labeled pixels to predict every pixel in a new image? This task is extremely challenging (see Fig. 1) because a single body part can contain visually distinct areas (e.g. head consists of


