Hi, this week you'll learn about Scaling Kaggle Competitions Using XGBoost: Part 1.

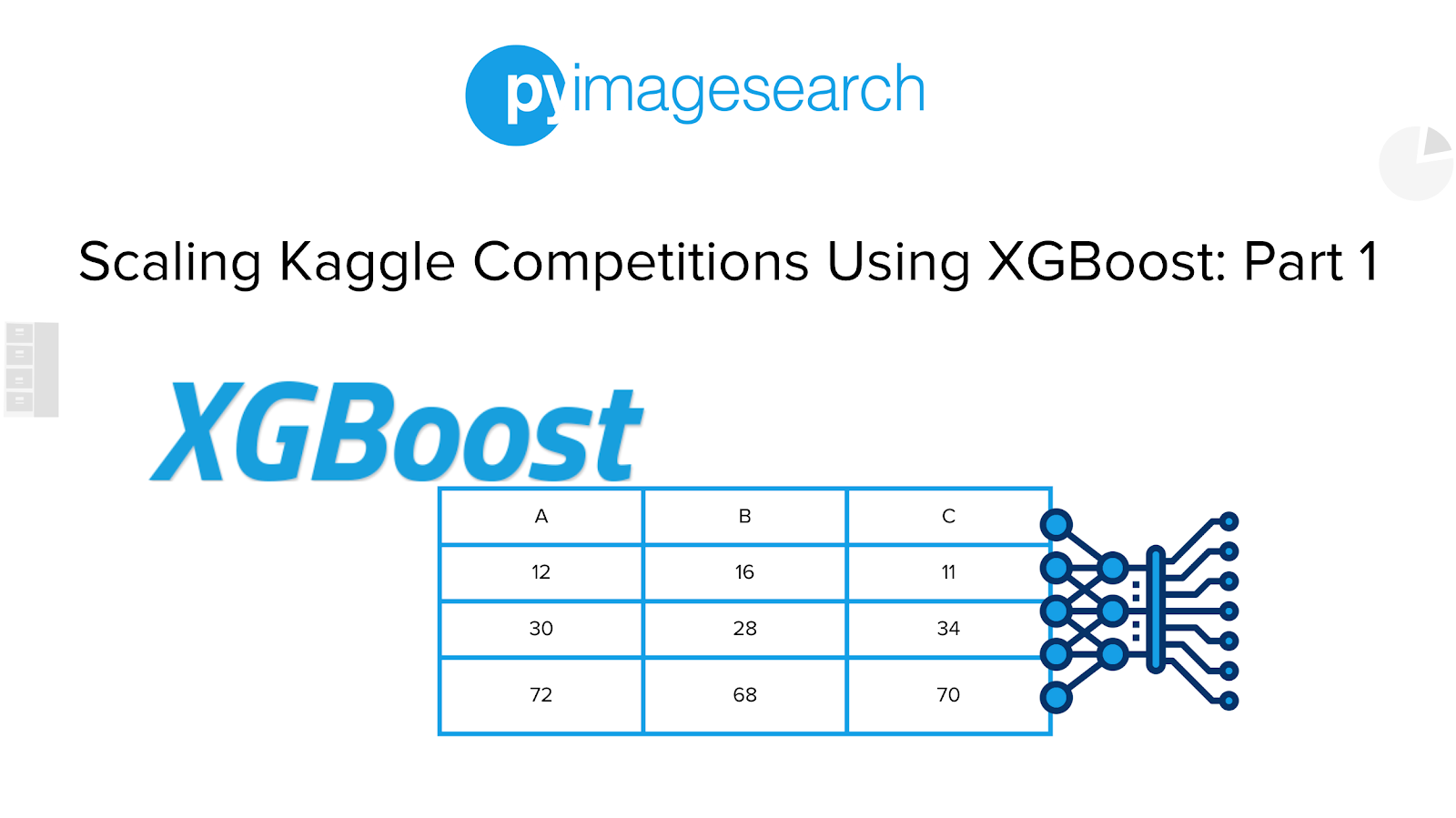

If you love the thrill of competing against other skilled individuals and love deep learning, we believe you'll enjoy what we have brewing in this tutorial series. Kaggle has long been a go-to resource for deep learning practitioners, veterans and newcomers alike. We have all spent countless hours finding datasets and notebooks to further our understanding of the subject. Naturally, at some point, most of us have been lured by the shine of Kaggle competitions. And for good reason: the whole process of tackling a problem, understanding the data, and working out a good solution, alone or with a team, can be a beautiful (sometimes addictive) experience. In today's blog post, we introduce you to XGBoost, an algorithm that is deceptively simple to use and has been part of many winning Kaggle solutions. Through it, we aim to ease our readers' transition into the world of competitive Kaggling.

The big picture: XGBoost is an algorithm built on efficient, optimized gradient boosting. We show how easily it plugs into a problem statement once a few prerequisites are in place.

How it works: Kaggle competitions can be a tough nut to crack, and they have become an informal yet significant part of growing as a deep learning researcher. So while this series also aims to provide a proper step-by-step guide to tackling Kaggle problems, this introductory post lays the foundation for understanding XGBoost and its prerequisites (e.g., decision trees).

Our thoughts: While you can simply plug XGBoost into your project, we firmly believe it's important to understand the math and intuition behind basic concepts like decision trees. We should strive to ensure machine learning models are never called black boxes!

Yes, but: Don't be disheartened if the math later gets a bit too tough. Understanding the intuition behind these algorithms can take you far enough to figure out the nuts and bolts that make them run.

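
To make the idea of "gradient boosting over decision trees" a little more concrete, here is a minimal, dependency-free sketch of plain gradient boosting with depth-1 regression trees ("stumps") under squared-error loss. It is our own illustration under simplifying assumptions (a single numeric feature, squared-error loss), not XGBoost's implementation: the function names `fit_stump`, `predict_stump`, `gradient_boost`, and `predict`, as well as the toy data, are hypothetical. XGBoost layers regularization, second-order gradient information, and many systems-level optimizations on top of this basic loop.

```python
from typing import List, Tuple

# Hypothetical sketch: plain gradient boosting with decision stumps, NOT XGBoost.
# A stump is stored as (threshold, left_value, right_value).
Stump = Tuple[float, float, float]


def fit_stump(xs: List[float], residuals: List[float]) -> Stump:
    """Fit a depth-1 regression tree: the threshold split that minimizes squared error."""
    best_sse, best = float("inf"), None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        if not left or not right:
            continue  # a valid split must leave samples on both sides
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum(
            (r - right_mean) ** 2 for r in right
        )
        if sse < best_sse:
            best_sse, best = sse, (threshold, left_mean, right_mean)
    if best is None:
        # All feature values are identical: fall back to a constant prediction.
        mean = sum(residuals) / len(residuals)
        best = (float("inf"), mean, mean)
    return best


def predict_stump(stump: Stump, x: float) -> float:
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs: List[float], ys: List[float], n_rounds: int = 100,
                   learning_rate: float = 0.1):
    """Squared-error gradient boosting: each new stump is fit to the current residuals."""
    base = sum(ys) / len(ys)  # the constant that minimizes squared error
    preds = [base] * len(ys)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        # For the loss 0.5 * (y - F)^2, the negative gradient w.r.t. F is y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each tree's contribution by the learning rate.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x: float) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


if __name__ == "__main__":
    # Toy data: two clusters of targets separated along a single feature.
    xs = [1, 2, 3, 4, 5, 6, 7, 8]
    ys = [1.0, 1.2, 0.9, 1.1, 5.0, 5.2, 4.9, 5.1]
    model = gradient_boost(xs, ys)
    print(round(predict(model, 2), 2), round(predict(model, 7), 2))
```

Each round fits a small tree to whatever the current ensemble still gets wrong, and the learning rate keeps any single tree from dominating. This additive, residual-correcting loop is the gradient boosting idea that XGBoost builds on and optimizes.
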
Stay smart: And stay tuned for our upcoming blog posts!

Click here to read the full tutorial.

Do You Have an OpenCV Project in Mind?

You can instantly access all of the code for Scaling Kaggle Competitions Using XGBoost: Part 1, along with courses on TensorFlow, PyTorch, Keras, and OpenCV, by joining PyImageSearch University.

Guaranteed Results: If you haven't accomplished your Computer Vision/Deep Learning goals, let us know within 30 days of purchase and get a full refund.

Your PyImageSearch Team
