Hello trend,

This is Satya Mallick from LearnOpenCV.com. This email needs a summary.

Summary

- Today's Post:

  - Human Pose Estimation using Keypoint-RCNN

  - Code: PyTorch-Keypoint-RCNN

  - See the next section for a description of keypoint detectors and the RCNN family.

- Missed our Kickstarter campaign? Try Indiegogo!

- OpenCV Weekly Webinar

  - Guest: Katherine Scott from Open Robotics

  - Topic: All about Robot Operating System (ROS)

  - Date and Time: June 24, 9 AM Pacific Time

  - Registration: Free Registration Link.

  - Previous episode: How we test models at Modelplace.AI

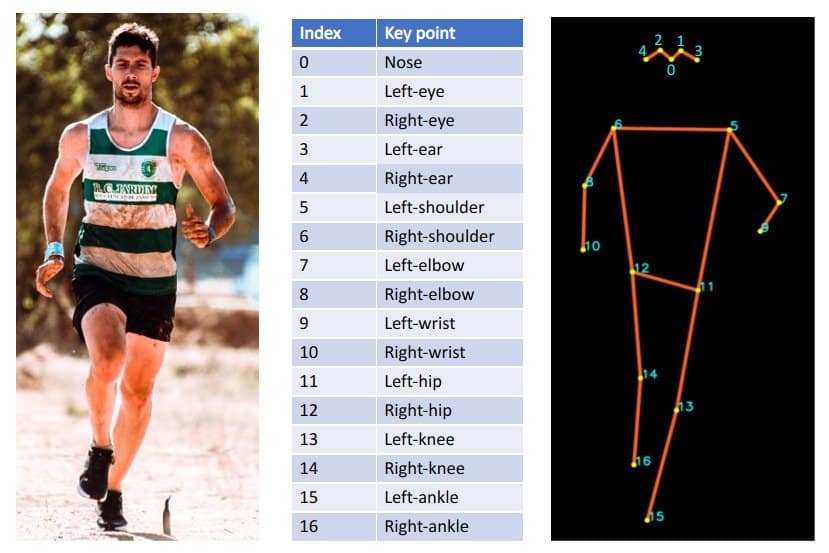

Human Pose Estimation using Keypoint RCNN

Today, we have an epic post on Human Pose Estimation using Keypoint-RCNN. That's a mouthful. Let's break it down and make it more palatable.

What is a keypoint detector?

A keypoint detector finds important (key) points on an object. For example, you can train it to be a human pose estimator, and it will output the locations of specific points on the human body. You can also train it for facial landmark detection or for hand pose estimation. We have previously covered human pose estimation, facial landmark detection, and hand pose estimation based on other architectures.
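To make that output concrete, here is a minimal sketch of how a pose estimator's result for one person might be represented. It assumes the 17-point COCO keypoint layout and one (x, y, score) triple per point; this email does not say which layout the post uses. The helper name `visible_keypoints`, the sample numbers, and the 0.5 threshold are all made up for illustration.

```python
# Assumption: the 17-keypoint COCO person layout, in its standard order.
COCO_KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def visible_keypoints(keypoints, min_score=0.5):
    """Return {name: (x, y)} for keypoints whose score is at least min_score.

    `keypoints` is a list of (x, y, score) triples, one per COCO keypoint.
    This is a hypothetical helper, not part of any library.
    """
    if len(keypoints) != len(COCO_KEYPOINT_NAMES):
        raise ValueError("expected one (x, y, score) triple per keypoint")
    return {
        name: (x, y)
        for name, (x, y, score) in zip(COCO_KEYPOINT_NAMES, keypoints)
        if score >= min_score
    }


# Made-up detection for one person: only two points have a high score.
example = [(0.0, 0.0, 0.0)] * 17
example[0] = (120.0, 80.0, 0.98)   # nose
example[5] = (100.0, 140.0, 0.91)  # left_shoulder
print(visible_keypoints(example))
```

A facial landmark or hand pose detector would look much the same, just with a different list of point names.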

What is RCNN?

RCNN is an acronym for Regions with Convolutional Neural Network features. RCNN was first used for object detection back in 2013, which is about 800 years ago on the AI timeline.

RCNN was challenging to train, and it was SLOW.

You could make yourself a cup of coffee as it detected objects on a single image using a GPU. And in case you did not have a GPU on your machine, you could enjoy your lunch!

Jokes aside, the primary author of the RCNN paper, Ross Girshick, has made enormous contributions to the field.

Fast, Faster, Mask, and Keypoint RCNN

Girshick's next paper in the series was titled Fast RCNN. It was 213 times faster than RCNN at test time, and more accurate too.

But why stop at fast? Faster RCNN, Girshick's subsequent work, was a massive step toward real-time object detection. It also produced state-of-the-art accuracy.

Next, they came up with Fastest RCNN. I'm kidding!

What Faster RCNN did provide was a framework to solve other problems in computer vision that, on the surface, have nothing to do with object detection.

With Mask RCNN, they extended the ideas in Faster RCNN to solve the instance segmentation problem.

And the same ideas with minor modifications are implemented in Keypoint RCNN for solving the keypoint estimation problem.
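If you are curious how a detection framework ends up predicting keypoints, here is a rough sketch of the decoding idea. The Mask RCNN paper models each keypoint as its own small heatmap predicted inside a person's box, and the hottest cell gives the location. The function name `decode_keypoints`, the nested-list heatmaps, and the cell-center mapping are simplifying assumptions for illustration; this is not the code from the post or from any library.

```python
def decode_keypoints(heatmaps, box):
    """Turn K per-keypoint heatmaps into K (x, y, score) image-space points.

    heatmaps: list of K grids, each a list of rows of floats (H x W).
    box: (x1, y1, x2, y2) person box in image pixels.
    Hypothetical helper: picks the highest-scoring cell of each heatmap and
    maps the center of that cell back into the box.
    """
    x1, y1, x2, y2 = box
    points = []
    for heatmap in heatmaps:
        height, width = len(heatmap), len(heatmap[0])
        # Find the (row, col) of the peak; the first peak wins on ties.
        row, col = max(
            ((r, c) for r in range(height) for c in range(width)),
            key=lambda rc: heatmap[rc[0]][rc[1]],
        )
        # Scale the cell center from heatmap coordinates to image coordinates.
        x = x1 + (col + 0.5) * (x2 - x1) / width
        y = y1 + (row + 0.5) * (y2 - y1) / height
        points.append((x, y, heatmap[row][col]))
    return points


# One made-up 4x4 heatmap whose peak sits at row 1, column 2.
toy_heatmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.0, 0.2, 0.9, 0.1],
    [0.0, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(decode_keypoints([toy_heatmap], box=(10, 20, 50, 100)))
```

A real model works on tensors and refines the peak location, but the box-plus-heatmap idea is why the same framework can be reused for masks and for keypoints.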

OpenCV Courses Now On Indiegogo InDemand

If you want to walk fast, walk alone. If you want to go far, walk together.

Our 1522 backers helped us raise an astounding $238,283 during the Kickstarter campaign.

We thank you all from the bottom of our hearts for your support, encouragement, and the trust you have placed in us.

It means the world to us!

We have signed a social contract with you to bring the best courses in computer vision and AI to the world, and the OpenCV Course Team is now entirely focused on delivering the rewards of this Kickstarter campaign.

Meanwhile, our marketing team had a brilliant idea. We have moved the campaign to Indiegogo InDemand so that people who missed our Kickstarter campaign can still buy our courses at discounted prices, only slightly higher than the Kickstarter prices. Still pretty darn good!

The new rewards unlocked when stretch goals are hit during the Indiegogo campaign will be passed along to the Kickstarter backers as well.

It's a win-win for everyone. Let's go!

OpenCV Courses : Indiegogo Campaign

Thanks again,

Satya
